Abstract: This article explores how a Hidden Markov Model (HMM) can classify distinct states of a market stress indicator inspired by the ‘QF-Lib’ approach. We use the Python libraries fredapi and yfinance for data acquisition, and numpy and hmmlearn for computation.

In the K-Means classification article (K-Means Algorithm to Detect the Current Market Regime) I covered some key characteristics of classification algorithms. Today I will dig deeper into a more advanced classification model: the Hidden Markov Model. I will use it to classify the states of a ‘QF-Lib’-inspired market stress indicator, which I have adapted so that all the data needed to calculate it can be fetched easily with two Python libraries: fredapi (a FRED API wrapper) and yfinance (a Yahoo Finance wrapper).

A Markov Model assumes that the state of a system at a given time depends solely on the state of the system at the previous time. In other words, the probability of an event happening right now is determined only by the event that just occurred. Using weather as an example: if yesterday was very windy, a Markov Model predicts today’s weather from that single observation about yesterday. If, historically, windy days are followed by rainy days, then we can estimate that today might be rainy, given that yesterday was windy. This seems to be the meteorological pattern observed in Lithuania.
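To make the Markov property concrete, here is a minimal sketch of a three-state weather chain. The transition probabilities are made up for illustration (they are not Lithuanian statistics); the point is that today's forecast is read from yesterday's row of the transition matrix and nothing else.

```python
import numpy as np

# Hypothetical weather states and transition probabilities (illustrative only).
states = ["windy", "sunny", "rainy"]
# transition[i, j] = P(today = states[j] | yesterday = states[i]); each row sums to 1.
transition = np.array([
    [0.2, 0.2, 0.6],  # after a windy day, rain is the most likely outcome
    [0.3, 0.5, 0.2],
    [0.3, 0.3, 0.4],
])

def forecast_today(yesterday: str) -> dict:
    """Return P(today's weather) using only yesterday's state (Markov property)."""
    row = transition[states.index(yesterday)]
    return dict(zip(states, row))

probs = forecast_today("windy")
most_likely = max(probs, key=probs.get)
print(most_likely)  # rainy
```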

However, a Hidden Markov Model (HMM) introduces an additional layer of complexity. In an HMM, the actual state of the system (e.g., windy, sunny, rainy) is not directly observable. Instead, we see certain outcomes or observations that are influenced by these hidden states. So, using the weather analogy, let’s say we don’t directly observe whether it’s windy, sunny, or rainy. Instead, we might observe people carrying umbrellas. From this observation, we can infer that it might be rainy, but we’re not certain. The “hidden” in HMM refers to these unobserved states (e.g., windy, sunny, rainy), and we use the observable data (e.g., people with umbrellas) to make inferences about these hidden states.

In the context of Lithuanian weather patterns, if we only know that “today is cloudy” (an observation) but not what the actual weather was yesterday (the hidden state), we can use an HMM to estimate the most likely weather yesterday and the probable transition to today’s observation. Graphically, this looks like the chain shown in Fig. 1.
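Inferring hidden states from observations is commonly done with the Viterbi algorithm, which finds the single most likely sequence of hidden states. Below is a minimal NumPy sketch on a simplified two-state version of the umbrella example (rainy/sunny hidden, umbrella/no umbrella observed); all probabilities are hypothetical.

```python
import numpy as np

hidden = ["rainy", "sunny"]
observations = ["umbrella", "no umbrella"]

start = np.array([0.5, 0.5])   # P(first hidden state)
trans = np.array([[0.7, 0.3],  # P(next hidden | current hidden)
                  [0.3, 0.7]])
emit = np.array([[0.9, 0.1],   # P(observation | rainy)
                 [0.2, 0.8]])  # P(observation | sunny)

def viterbi(obs_idx):
    """Most likely hidden-state path for a sequence of observation indices."""
    n_states, T = len(start), len(obs_idx)
    log_delta = np.log(start) + np.log(emit[:, obs_idx[0]])
    backptr = np.zeros((T, n_states), dtype=int)
    for t in range(1, T):
        # scores[i, j]: best log-prob of being in state i at t-1 and moving to j at t
        scores = log_delta[:, None] + np.log(trans)
        backptr[t] = scores.argmax(axis=0)
        log_delta = scores.max(axis=0) + np.log(emit[:, obs_idx[t]])
    # Trace the best path backwards from the most likely final state
    path = [int(log_delta.argmax())]
    for t in range(T - 1, 0, -1):
        path.append(int(backptr[t, path[-1]]))
    return [hidden[i] for i in reversed(path)]

seq = ["umbrella", "umbrella", "no umbrella", "umbrella"]
print(viterbi([observations.index(o) for o in seq]))
# ['rainy', 'rainy', 'sunny', 'rainy']
```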

When classifying market states, our insights come primarily from observable indicators: indicator levels are high, volatility is increasing, and so forth. The state itself cannot be seen; we can only guess at the current market state.

One methodology that resonates with my analytical approach is the ‘QF-Lib’ framework, as illustrated in its demonstration script (market_stress_indicator_demo.py (github.com)). It divides market stress into three separate components: Volatility, Bond Spreads and Liquidity. The authors then take the Z-score of each component and compute a weighted average, which is the quantified value called the “Market Stress Indicator”. My intention here is to treat the market stress indicator values as observations of the hidden market state and then try to predict the next most likely market state.
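The composite itself is a weighted average of the component Z-scores. A minimal pandas sketch, assuming a DataFrame whose columns are already Z-scored components; the column names and values are hypothetical, and the weights reuse the original script's seven-component weights [1, 1, 1, 1, 1, 1, 3].

```python
import numpy as np
import pandas as pd

def stress_indicator(z_scores: pd.DataFrame, weights) -> pd.Series:
    """Weighted average of component Z-scores (one column per component).

    Rows containing NaN should be dropped beforehand, since sum() skips NaN.
    """
    w = np.asarray(weights, dtype=float)
    if w.shape[0] != z_scores.shape[1]:
        raise ValueError("need one weight per component column")
    return (z_scores * w).sum(axis=1) / w.sum()

# Hypothetical Z-scores for the seven original components on three dates.
cols = ["VIX", "MOVE", "FX", "HY", "BBB", "A", "TED"]
z = pd.DataFrame(np.ones((3, 7)), columns=cols,
                 index=pd.date_range("2020-01-03", periods=3, freq="W-FRI"))
z["TED"] = 2.0
msi = stress_indicator(z, weights=[1, 1, 1, 1, 1, 1, 3])
print(msi)  # each value is (6 * 1 + 3 * 2) / 9 = 1.333...
```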

However, because the script is relatively old and built from exotic indexes, it cannot be used out of the box. For example, the TED Spread has been discontinued. We must modify the logic.

Original Indexes:

- Volatility
  - VIX (US Equity)
  - MOVE (US Bonds)
  - JPMVXYGL (Global FX)
- Corporate Spreads
  - CSI BARC (HY)
  - CSI BBB (BBB)
  - CSI A (A)
- Liquidity
  - BASPTDSP (TED Spread)

weights = [1, 1, 1, 1, 1, 1, 3]

Problem:

The TED Spread no longer exists, even though it carries 3x the weight of the other components, and the Global FX index (JPMVXYGL) is not accessible.

Solution:

The US dollar is the world’s reserve currency and currently holds a strong lead. If we can calculate the volatility of the dollar, we will have a good proxy for global FX volatility.

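Additionally, the euro, as the second most traded currency, is a natural counterpart to the dollar. The trailing ATR (Average True Range) of EUR/USD gives a volatility estimate for about 80% of the world’s most traded currencies. ATR is calculated as follows:

$$TR_t = \max\left(\text{High}_t - \text{Low}_t,\ \lvert \text{High}_t - C_{t-1} \rvert,\ \lvert \text{Low}_t - C_{t-1} \rvert\right), \qquad ATR_t = \frac{1}{n}\sum_{i=0}^{n-1} TR_{t-i}$$

where $C_{t-1}$ is the previous close and $n$ is the look-back window.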
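A minimal pandas sketch of the trailing ATR. It uses the standard True Range (the largest of High − Low, |High − previous Close| and |Low − previous Close|) and a simple n-period rolling mean; the simple mean is an assumption, since Wilder's original ATR uses a smoothed average. The column names `High`, `Low` and `Close` follow the usual OHLC naming, and the bars are synthetic.

```python
import pandas as pd

def average_true_range(df: pd.DataFrame, n: int = 14) -> pd.Series:
    """Trailing ATR as a simple n-period mean of the True Range.

    Expects columns 'High', 'Low' and 'Close'.
    """
    prev_close = df["Close"].shift(1)
    true_range = pd.concat(
        [df["High"] - df["Low"],
         (df["High"] - prev_close).abs(),
         (df["Low"] - prev_close).abs()],
        axis=1,
    ).max(axis=1)  # NaN (first row, no previous close) is skipped by max
    return true_range.rolling(window=n).mean()

# Synthetic EUR/USD-like bars
bars = pd.DataFrame({
    "High":  [1.10, 1.12, 1.11, 1.13],
    "Low":   [1.08, 1.09, 1.07, 1.10],
    "Close": [1.09, 1.11, 1.08, 1.12],
})
atr = average_true_range(bars, n=3)
print(atr)  # NaN, NaN, 0.03, 0.04 (up to float rounding)
```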

Liquidity deserves an article of its own. Since there is no “official” way of calculating it, one approach is to split the role of the TED Spread into three different components:

Fed Funds Rate. The lower the rate, the more money flows into the economy, which suits our goal. The problem, however, is that changes are usually small and extremely slow.

10Y – 2Y Spread. This shows the current market health and is, in my view, by far the most important indicator of how investors see the market. An inverted curve means that investors are pricing in a “heavy” market in the near future: demand for longer-maturity bonds pushes long-term yields below short-term ones.

Liquidity Indicator on SPY. The idea for this indicator comes from the CFA article (A Practical Approach to Liquidity Calculation (cfainstitute.org)). The authors argue that liquidity can be captured by the question: “What amount of money is needed to create a daily single-unit price fluctuation of the stock?”. The formula can be re-created like this:

Where:

- L = Liquidity
- V = Volume traded
- C = Closing price
- R = Range (High – Low)
- p = subscript denoting the previous day
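One plausible reading of these definitions, which is an assumption and not a transcription of the CFA formula, is dollar volume per unit of daily range, taken from the previous day: L_t = V_{t-1} · C_{t-1} / R_{t-1}. A pandas sketch under that assumption, with the hypothetical helper `liquidity_indicator` and synthetic SPY-like bars:

```python
import numpy as np
import pandas as pd

def liquidity_indicator(df: pd.DataFrame) -> pd.Series:
    """ASSUMED form: previous day's dollar volume per unit of previous day's range.

    L_t = V_{t-1} * C_{t-1} / R_{t-1}, with R = High - Low.
    Days with a zero range become NaN to avoid division by zero.
    """
    dollar_volume = df["Volume"] * df["Close"]
    price_range = (df["High"] - df["Low"]).replace(0, np.nan)
    return (dollar_volume / price_range).shift(1)  # use only the previous day's data

spy = pd.DataFrame({
    "High":   [101.0, 102.0, 103.0],
    "Low":    [99.0, 101.0, 100.0],
    "Close":  [100.0, 101.5, 102.0],
    "Volume": [1_000, 2_000, 1_500],
})
liq = liquidity_indicator(spy)
print(liq)  # NaN, 50000.0, 203000.0
```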

With these four indicators in place, we prepare the dataset by computing the rolling mean and standard deviation of each indicator and then its Z-score. Assuming the data is in a pd.DataFrame, the Python code is as follows:

```python
# Extremely important: first separate training data from prediction data
tr_data: list = ['2005-01-01', '2015-01-01']  # training period [start, end]
pr_data: list = ['2015-01-01', '2023-01-01']  # out-of-sample prediction period [start, end]

# <...>
# (training_data and prediction_data are pd.DataFrames with one column per
#  indicator; window is the rolling look-back length)

# Compute rolling mean and standard deviation for each period separately
roll_means_training = training_data.rolling(window=window).mean()
roll_means_prediction = prediction_data.rolling(window=window).mean()
roll_std_devs_training = training_data.rolling(window=window).std()
roll_std_devs_prediction = prediction_data.rolling(window=window).std()

# Compute Z-scores: distance from the rolling mean in rolling standard deviations
training_data_Z = (training_data - roll_means_training) / roll_std_devs_training
prediction_data_Z = (prediction_data - roll_means_prediction) / roll_std_devs_prediction
```

Afterwards, drop the NaN values and scale the data so that it has a mean of 0 and a variance of 1. Then run a BIC analysis to determine the best number of components. See Fig. 5 below:

The Y axis shows the BIC score (the lower, the better) and the X axis the number of components, indexed from 0. I chose index 6 as the optimum, which corresponds to 7 components.

Fig. 5 – Bayesian Information Criterion (BIC) Chart
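The NaN-dropping, scaling and BIC steps can be sketched as follows. This is an illustration under stated assumptions, not the code behind Fig. 5: the scaling statistics come from the training period only (my assumption, in keeping with the strict train/prediction split), the HMM is assumed to use full covariance matrices, and the log-likelihoods are invented placeholders. BIC is computed as -2 ln L + k ln n, where ln L is the fitted model's log-likelihood (in hmmlearn, `model.score(X)`), k the number of free parameters and n the number of observations.

```python
import numpy as np
import pandas as pd

def standardize(train_z: pd.DataFrame, pred_z: pd.DataFrame):
    """Drop NaN rows, then scale both sets with the training mean and std."""
    train, pred = train_z.dropna(), pred_z.dropna()
    mu, sigma = train.mean(), train.std(ddof=0)
    return (train - mu) / sigma, (pred - mu) / sigma

def gaussian_hmm_n_params(n_states: int, n_features: int) -> int:
    """Free parameters of a Gaussian HMM with full covariance matrices."""
    start = n_states - 1                                     # initial distribution sums to 1
    trans = n_states * (n_states - 1)                        # each transition row sums to 1
    means = n_states * n_features
    covars = n_states * n_features * (n_features + 1) // 2   # symmetric matrices
    return start + trans + means + covars

def bic(log_likelihood: float, n_states: int, n_features: int, n_samples: int) -> float:
    """Bayesian Information Criterion; lower is better."""
    k = gaussian_hmm_n_params(n_states, n_features)
    return -2.0 * log_likelihood + k * np.log(n_samples)

# Synthetic stand-in for the Z-scored indicators (first rows NaN, as after a rolling window).
rng = np.random.default_rng(0)
train_z = pd.DataFrame(rng.normal(2.0, 3.0, size=(200, 4)))
pred_z = pd.DataFrame(rng.normal(2.0, 3.0, size=(100, 4)))
train_z.iloc[:5] = np.nan
X_train, X_pred = standardize(train_z, pred_z)

# Placeholder log-likelihoods per number of states (NOT real results).
log_liks = {1: -2000.0, 2: -1700.0, 3: -1600.0, 4: -1590.0}
scores = {s: bic(ll, s, n_features=X_train.shape[1], n_samples=len(X_train))
          for s, ll in log_liks.items()}
best_n_states = min(scores, key=scores.get)  # 3: the 4-state gain does not pay for its extra parameters
```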

After this is done, train the HMM 50 times and choose the model with the highest log-likelihood (the smallest “error”). This is necessary because the likelihood has many local optima, and each training run, starting from a different random initialization, converges to a different point. I want the model that converges to the best local optimum.

The HMM returns a np.ndarray of state labels without any index. For illustration:

```
Predicted_Values = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 0, 0, 0, 0, 0, 0, 0, 2, 2, 2, 2, 2, 2, 5, 5, 5, 5, 5, 5...]
```

The next step is to match these market state values to the DataFrame holding the indicators, market prices and dates. Since the lengths are the same, this is straightforward.
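A minimal sketch of that alignment, assuming the DataFrame has had the same NaN rows dropped as the model input (otherwise the lengths differ). The helper `attach_states` and the weekly frame are hypothetical.

```python
import numpy as np
import pandas as pd

def attach_states(frame: pd.DataFrame, states: np.ndarray) -> pd.DataFrame:
    """Attach HMM state labels (a bare array) to a date-indexed DataFrame."""
    if len(states) != len(frame):
        raise ValueError("state array and DataFrame must have the same length")
    out = frame.copy()
    out["state"] = np.asarray(states)  # positional assignment, keeps the date index
    return out

# Hypothetical weekly frame
frame = pd.DataFrame({"close": [100.0, 101.0, 99.5, 102.0]},
                     index=pd.date_range("2015-01-02", periods=4, freq="W-FRI"))
labeled = attach_states(frame, np.array([1, 1, 2, 2]))
print(labeled)
```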

Plotting each market state (7 in total) separately produces charts like the one shown in Fig. 6.

Next, manually classify the states as “Bear”, “Bull” or “Neutral” based on how the charts look. For example, states 0, 3 and 5 are bull market states. State 1 can be classified as a bear market, since it captured a drop in total return from +10% to -15%. States 2 and 4 lean more toward red (bearish) than yellow (neutral). State 6 appears bullish, but in its first half it rose and then fell, and afterwards its action stayed relatively choppy, so it is classified as yellow.

One way to do this automatically is to apply DTW (dynamic time warping) analysis: match each state's curve against flat, upward-trending and downward-trending lines and check which one has the smallest distance. That yields the classification. While this method works well most of the time, classification is too important to leave to it, so I prefer to do it manually. Once the market is classified into states, plot them all on one very crowded chart purely for visual purposes. See Fig. 7.
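A minimal sketch of the DTW idea: compute the classic dynamic-time-warping distance between a state's cumulative-return curve and three straight template lines (upward, flat, downward) and pick the closest. The template `slope`, the helper names and the synthetic curve are assumptions; in practice the slope would need to match the scale of the returns being classified.

```python
import numpy as np

def dtw_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Classic dynamic time warping distance with absolute-difference cost."""
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # extend the cheapest of: step in a only, step in b only, step in both
            cost[i, j] = d + min(cost[i - 1, j], cost[i, j - 1], cost[i - 1, j - 1])
    return float(cost[n, m])

def classify_curve(cum_return: np.ndarray, slope: float = 0.01) -> str:
    """Label a cumulative-return curve by its nearest straight-line template under DTW."""
    t = np.arange(len(cum_return), dtype=float)
    templates = {
        "Bull": slope * t,            # upward-trending line
        "Neutral": np.zeros_like(t),  # flat line
        "Bear": -slope * t,           # downward-trending line
    }
    distances = {label: dtw_distance(cum_return, tpl) for label, tpl in templates.items()}
    return min(distances, key=distances.get)

rng = np.random.default_rng(1)
falling = np.cumsum(rng.normal(-0.01, 0.002, size=30))  # synthetic falling curve
print(classify_curve(falling))  # Bear
```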

But how do we actually backtest, or even trade, this strategy? To classify the market state, the HMM needs the period (a week in our case) to close. A decision cannot be made on the spot, and trading on a classification made for the previous week sounds awful. Or does it?

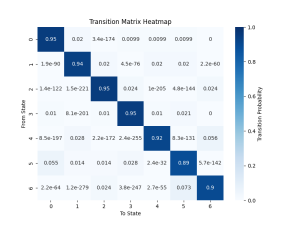

One of the data structures the HMM returns is a transition probability matrix, which describes the probability of moving from the current state to each state. For example, if the current market state is number 4, it might have a 20% chance of moving to state 5, a 60% chance of moving to state 1 and a 20% chance of moving to state 0 (the other states get 0%). With this logic, on Friday we can calculate this week’s market state and then forecast next week’s state by picking the state with the highest transition probability. We can visualize this with a heat map. See Fig. 8.
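In hmmlearn the matrix is exposed as the fitted model's `transmat_` attribute (rows are the current state, columns the next state). A minimal sketch of the Friday forecast, using a toy 7-state matrix whose row 4 is the example above; the other rows are placeholders where every state simply persists.

```python
import numpy as np

def forecast_next_state(transmat: np.ndarray, current_state: int):
    """Most likely next state and the full next-state distribution."""
    row = transmat[current_state]
    return int(np.argmax(row)), row

transmat = np.eye(7)                                # placeholder rows: every state persists
transmat[4] = [0.2, 0.6, 0.0, 0.0, 0.0, 0.2, 0.0]   # the example row for state 4
next_state, dist = forecast_next_state(transmat, current_state=4)
print(next_state)  # 1 (60% probability)
```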

This is interesting, as it shows that the HMM expects next week to be… the same as the week before, with a probability of more than 90%! This makes sense, since market states don’t change that quickly: all indicators in the market stress indicator are calculated on a weekly basis (some even on a quarterly basis!), so the HMM does not expect the market state to jump around all the time. Hence, for the backtest, we can simply go 100% long if last week’s state was bullish, 60% long if it was neutral and 30% long if it was bearish. The backtest is shown below as Fig. 9.

- Buy&Hold
  - Max Drawdown: 32.23%
  - Return: 84.36%
- Strategy
  - Max Drawdown: 18.49%
  - Return: 109.39%
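A minimal sketch of the allocation rule and the two reported statistics, assuming weekly closing prices and the state-to-regime mapping chosen above. Each week's position depends only on the state classified at the close of the previous week; uninvested capital is assumed to earn nothing. The prices and states are synthetic, so the output does not reproduce the Fig. 9 numbers.

```python
import pandas as pd

# Manual classification described above: HMM state -> regime.
REGIME = {0: "Bull", 3: "Bull", 5: "Bull", 1: "Bear", 2: "Bear", 4: "Bear", 6: "Neutral"}
# Allocation rule: share of capital held long in each regime.
EXPOSURE = {"Bull": 1.0, "Neutral": 0.6, "Bear": 0.3}

def max_drawdown(equity: pd.Series) -> float:
    """Largest peak-to-trough decline of an equity curve, as a positive fraction."""
    return float((1.0 - equity / equity.cummax()).max())

def backtest(prices: pd.Series, states: pd.Series) -> dict:
    """Weekly backtest: week t's exposure comes from the state classified at week t-1."""
    weekly_ret = (prices / prices.shift(1) - 1.0).fillna(0.0)
    exposure = states.map(REGIME).map(EXPOSURE).shift(1).fillna(0.0)  # no position in week 0
    strat_equity = (1.0 + exposure * weekly_ret).cumprod()
    bh_equity = (1.0 + weekly_ret).cumprod()
    return {
        "buy_hold_return": float(bh_equity.iloc[-1] - 1.0),
        "buy_hold_max_dd": max_drawdown(bh_equity),
        "strategy_return": float(strat_equity.iloc[-1] - 1.0),
        "strategy_max_dd": max_drawdown(strat_equity),
    }

# Synthetic four-week example
prices = pd.Series([100.0, 110.0, 99.0, 105.0])
states = pd.Series([0, 1, 1, 0])
print(backtest(prices, states))
```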

Note that this backtest does not introduce look-ahead bias: the model was trained, and its states were classified, on the 2005 to 2015 data, while the backtest was conducted on the 2015 to 2023 data.

Ideally, the model should be retrained more often, preferably after a shift in the market (for example, a large move, a Fed statement or a virus outbreak), so that it takes the latest data into account. Lastly, the states of the HMM can be used to train other models, or at least to check their accuracy.

To conclude:

The HMM is a powerful tool for classifying market states, and paired with a concrete indicator it can deliver results. Of course, it is by no means perfect. The biggest problem is that its predictive power rests on events that have already happened (last week), so it is always acting on past information.
